How to fix too many open files problem in Linux

System administrators who run dedicated web servers may have run into the “Too many open files” error, even though the file in question clearly exists on the server. So what is the problem? I ran into this recently on a ColdFusion server, so I am sharing my experience with you: what caused the issue and how I fixed it.

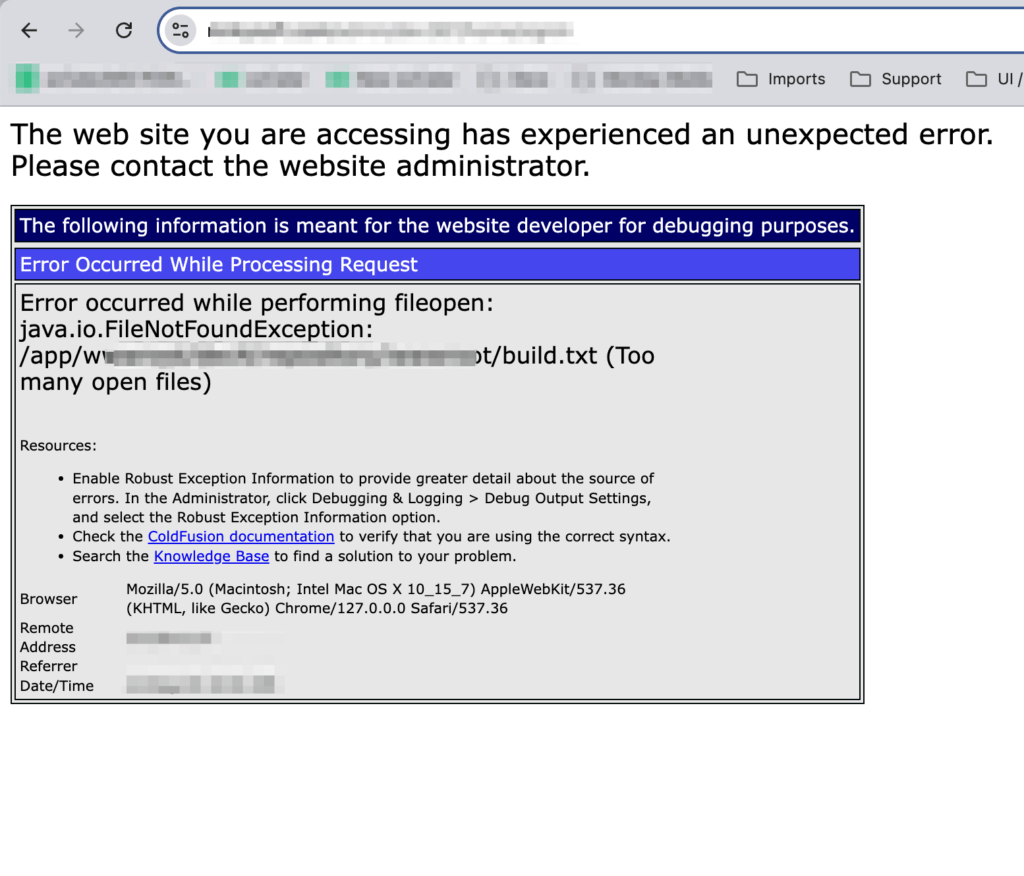

Suddenly, I received complaints from users about a server error. They also sent screenshots like the one below. For security reasons, I have blurred some of the text.

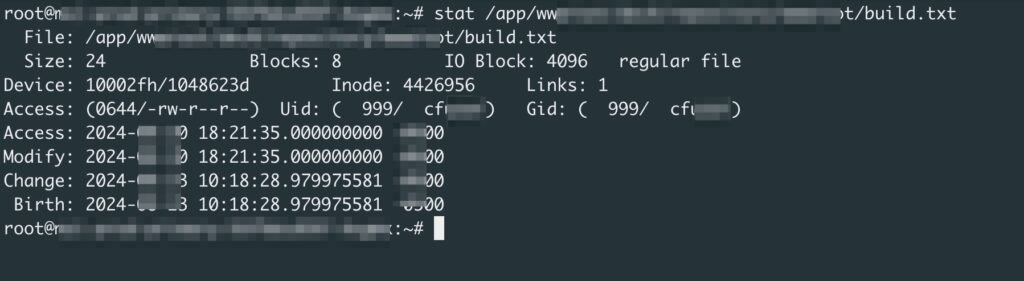

I checked the server and found that the file was there. I also checked whether the web server user had permission to open the file, and it did. You can start with the same check using this command:

stat <file path>

The output shows the file's owner, group, and access permissions, so you can confirm that the web server user is allowed to read it.

You can see that the file is accessible, but we still get the error “Too many open files”. That means the problem is somewhere else: the web server cannot open the file for some other reason.

After some investigation, I found that the web server process had exceeded its file descriptor limit.

What are File Descriptors?

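When a process opens a file, a socket, or any other I/O resource, the kernel gives it a handle called a file descriptor: a small non-negative integer that the process uses for later reads and writes. In Linux, everything is a file, so sockets and pipes use file descriptors too. There is a limit on how many file descriptors each process can have open at the same time. This prevents a single process from taking over all the resources of the operating system, so that the other processes in the system can keep running smoothly.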
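To see the per-process limit in action, here is a small bash sketch (not part of the original troubleshooting) that triggers the error on purpose. It runs in a subshell, lowers the soft limit on open files to 8, an arbitrary small value chosen only for this demo, and then opens /dev/null on descriptors 3, 4, 5 and so on until the kernel refuses. The failing redirection should be reported with the same “Too many open files” message. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# Reproduce "Too many open files" safely. The function body is a
# subshell ( ... ), so the lowered limit and the extra descriptors
# disappear when it returns.
reproduce_too_many_open_files() (
    ulimit -Sn 8     # soft limit: only descriptor numbers 0..7 are allowed
    opened=0
    fd=3             # 0, 1 and 2 are stdin, stdout and stderr
    # Keep opening /dev/null on the next descriptor number until the
    # kernel refuses. "command" keeps a failed exec from ending the subshell.
    while eval "command exec $fd</dev/null"; do
        opened=$((opened + 1))
        fd=$((fd + 1))
    done
    echo "opened $opened extra descriptors before hitting the limit"
)

reproduce_too_many_open_files
```

A web server that leaks descriptors hits the same wall, only with a much larger limit and much later.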

How do I check the limit?

You can use the command cat /proc/sys/fs/file-max to check the system-wide limit. This is the maximum number of file handles the kernel will allocate across all processes together, not a per-user limit; the exact number depends on the system. Separately, each process has its own limit on open files, and that limit comes in two types: a hard limit and a soft limit.
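To see how close the whole system is to that ceiling, you can read /proc/sys/fs/file-nr. It holds three numbers: the file handles currently allocated, the allocated-but-unused handles (always 0 on current kernels), and the maximum, which is the same value as file-max. A minimal sketch (the function name system_file_handles is hypothetical):

```bash
#!/usr/bin/env bash
# Print system-wide file handle usage next to the system-wide maximum.
# /proc/sys/fs/file-nr contains: allocated, unused, maximum.
system_file_handles() {
    # "unused" is read only to skip the middle field.
    read -r allocated unused max < /proc/sys/fs/file-nr
    echo "allocated=$allocated max=$max"
}

system_file_handles
```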

Hard Limit vs Soft Limit

The soft limit is the value the kernel actually enforces, and the hard limit is the ceiling for the soft limit. The soft limit cannot exceed the hard limit. A normal user can change the soft limit anywhere up to the hard limit (and can lower the hard limit), but only root, the superuser, can raise the hard limit.
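This rule can be checked with the shell's built-in ulimit (-S selects the soft limit, -H the hard limit, and -n the open-files limit). In this sketch the function body is a subshell, so the limits of the shell you run it from stay unchanged; the function name soft_hard_demo is hypothetical.

```bash
#!/usr/bin/env bash
# Show the soft/hard rule for open files. The function body is a
# subshell ( ... ), so the calling shell's limits are not changed.
soft_hard_demo() (
    hard=$(ulimit -Hn)
    soft=$(ulimit -Sn)
    echo "hard=$hard soft=$soft"

    # Lowering the soft limit is always allowed.
    ulimit -Sn $((soft / 2))
    echo "lowered soft=$(ulimit -Sn)"

    # Raising it again is allowed, as long as it stays <= the hard limit.
    ulimit -Sn "$hard"
    echo "raised soft=$(ulimit -Sn)"

    # Going above the hard limit is rejected, even for root.
    if ! ulimit -Sn $((hard + 1)) 2>/dev/null; then
        echo "soft limit cannot exceed hard limit ($hard)"
    fi
)

soft_hard_demo
```

The last step fails even as root because the kernel rejects any soft limit above the hard limit; root would have to raise the hard limit first.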

You can check the limit with these commands:

ulimit -Hn

ulimit -Sn

You can also check the limits for another user by switching to that user first (as root, or with that user's password). You can use these commands:

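su -l -s /bin/bash <AnotherUserName>

ulimit -Hn

ulimit -Sn

Here -l (the same as -) starts a login shell for that user, and -s /bin/bash, when run as root, gives you bash even if the account's login shell is /sbin/nologin, as is common for service accounts. Type exit to return to your own shell.

To check the limit that applies to a running service, first find the server's process ID with the command ps aux. Then run this command to see its limits: prlimit -p <ProcessID> (add --nofile to show only the open-files limit).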
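prlimit is the simplest option, but if you want the numbers inside a script, the same limits are exposed in /proc/<ProcessID>/limits on the “Max open files” line. A minimal sketch that extracts them with awk (nofile_limits is a hypothetical name):

```bash
#!/usr/bin/env bash
# Print the soft and hard "open files" limits of a running process.
# The line in /proc/<pid>/limits looks like:
#   Max open files    <soft>    <hard>    files
nofile_limits() {
    awk '/^Max open files/ { print "soft=" $4, "hard=" $5 }' "/proc/$1/limits"
}

# Example: the current shell. Replace "$$" with the service's process ID.
nofile_limits "$$"
```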

How many File Descriptors (FDs) does a service have open?

First, find the process ID of your service with this command: ps aux

Then check all FDs opened by that process ID with this command: lsof -p <ProcessID>

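You may get a long list, so you can use this command to get the count: lsof -p <ProcessID> | wc -l. This count is slightly higher than the real number of file descriptors, because lsof prints a header line and also lists entries such as the current directory (cwd), the program binary (txt), and memory-mapped libraries (mem). For an exact count, use ls /proc/<ProcessID>/fd | wc -l.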
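Wrapped in a small function (the name fd_count is hypothetical), the /proc approach looks like this. Each entry in /proc/<ProcessID>/fd is one open descriptor; for another user's process you need to run it as root or as that user, just like lsof.

```bash
#!/usr/bin/env bash
# Count a process's open file descriptors. Every entry in
# /proc/<pid>/fd is exactly one descriptor, so there is no header line
# and no cwd/txt/mem rows as in lsof output.
fd_count() {
    ls "/proc/$1/fd" | wc -l
}

# Example: the current shell. Replace "$$" with the service's process ID.
fd_count "$$"
```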

If the number ever gets close to the limit, you may need to raise the file descriptor limit. To catch this early, you can write a small bash script that logs the count of open files and run it from a cron job.
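Here is one possible version of such a script, a sketch rather than a finished tool. It appends a timestamp, the open-descriptor count, and the soft limit for one process to a log file. The script path, log path, and service name in the crontab comment are hypothetical. Because the process ID changes whenever the service restarts, the example crontab line looks it up each time with pgrep -o (the oldest matching process). Run the cron job as root or as the service user so it can read the process's /proc entries.

```bash
#!/usr/bin/env bash
# Log the open-descriptor count and soft "open files" limit of one
# process. Usage: log-fd-usage.sh <ProcessID> <LogFile>
#
# Example crontab entry, every 5 minutes (paths and name are hypothetical):
# */5 * * * * /usr/local/bin/log-fd-usage.sh "$(pgrep -o <ServiceName>)" /var/log/fd-usage.log

log_fd_usage() {
    pid="$1"
    logfile="$2"
    # $(( )) trims any padding that wc may add around the number.
    count=$(( $(ls "/proc/$pid/fd" | wc -l) ))
    soft=$(awk '/^Max open files/ { print $4 }' "/proc/$pid/limits")
    printf '%s pid=%s open=%s soft_limit=%s\n' \
        "$(date '+%Y-%m-%d %H:%M:%S')" "$pid" "$count" "$soft" >> "$logfile"
}

# Run only when both arguments are given (as in the cron entry above).
if [ "$#" -eq 2 ]; then
    log_fd_usage "$1" "$2"
fi
```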

How do we increase the limit?

You can increase the system-wide limit by running this command with root privileges: sysctl -w fs.file-max=100000. I have used 100000 as the limit. Check the current value first: the default scales with the amount of memory and is often already higher than 100000, in which case this command would lower it. This limit is system-wide, and the change does not survive a reboot. To make it permanent, edit /etc/sysctl.conf, or create a file with a .conf extension under /etc/sysctl.d/, and put in this content:

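fs.file-max = 100000

To load the new setting without rebooting, run sysctl --system (or sysctl -p if you edited /etc/sysctl.conf).

Keep in mind that fs.file-max does not raise the per-process limit, which is the one the web server hit in this case. Some systems, such as many containers, also do not let you change sysctl values. For the per-process limit, use the user-specific settings. To set a limit per user, edit this file: /etc/security/limits.conf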

To set a soft limit of 10,000 and a hard limit of 100,000 for the username wwwuser, add these lines:

wwwuser soft nofile 10000

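wwwuser hard nofile 100000

These settings are applied by PAM (pam_limits) when a session starts, so you need to restart your service from a new session for them to take effect. Services started by systemd do not read /etc/security/limits.conf; for those, set LimitNOFILE= in the service's unit file instead. Then verify the limit again, using the new process ID, with the command mentioned earlier: prlimit -p <ProcessID>.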